How Hybrid Mesh unlocks dbt collaboration at scale

One of the most important things that dbt does is unlock the ability for teams to collaborate on creating and disseminating organizational knowledge.

In the past, this primarily looked like a team working in one dbt Project to create a set of transformed objects in their data platform.

As dbt was adopted by larger organizations and began to drive workloads at a global scale, it became clear that we needed mechanisms to allow teams to operate independently from each other, creating and sharing data models across teams — dbt Mesh.

dbt Mesh is powerful because it allows teams to operate independently and collaboratively, each team free to build on their own but contributing to a larger, shared set of data outputs.

The flexibility of dbt Mesh means that it can support a wide variety of patterns and designs. Today, let’s dive into one pattern that is showing promise as a way to enable teams working on very different dbt deployments to work together.

How Hybrid Mesh enables collaboration between dbt Core and dbt Cloud teams

Scenario — A company with a central data team uses dbt Core. The setup is working well for that team. They want to scale their impact to enable faster decision-making, organization-wide. The current dbt Core setup isn't well suited for onboarding a larger number of less-technical, nontechnical, or less-frequent contributors.

The goal — Enable three domain teams of less-technical users to leverage and extend the central data models, with full ownership over their domain-specific dbt models.

-

Central data team: Data engineers comfortable using dbt Core and the command line interface (CLI), building and maintaining foundational data models for the entire organization.

-

Domain teams: Data analysts comfortable working in SQL but not using the CLI and prefer to start working right away without managing local dbt Core installations or updates. The team needs to build transformations specific to their business context. Some of these users may have tried dbt in the past, but they were not able to successfully onboard to the central team's setup.

Solution: Hybrid Mesh — Data teams can use dbt Mesh to connect projects across dbt Core and dbt Cloud, creating a workflow where everyone gets to work in their preferred environment while creating a shared lineage that allows for visibility, validation, and ownership across the data pipeline.

Each team will fully own its dbt code, from development through deployment, using the product that is appropriate to their needs and capabilities while sharing data products across teams using both dbt Core and dbt Cloud.

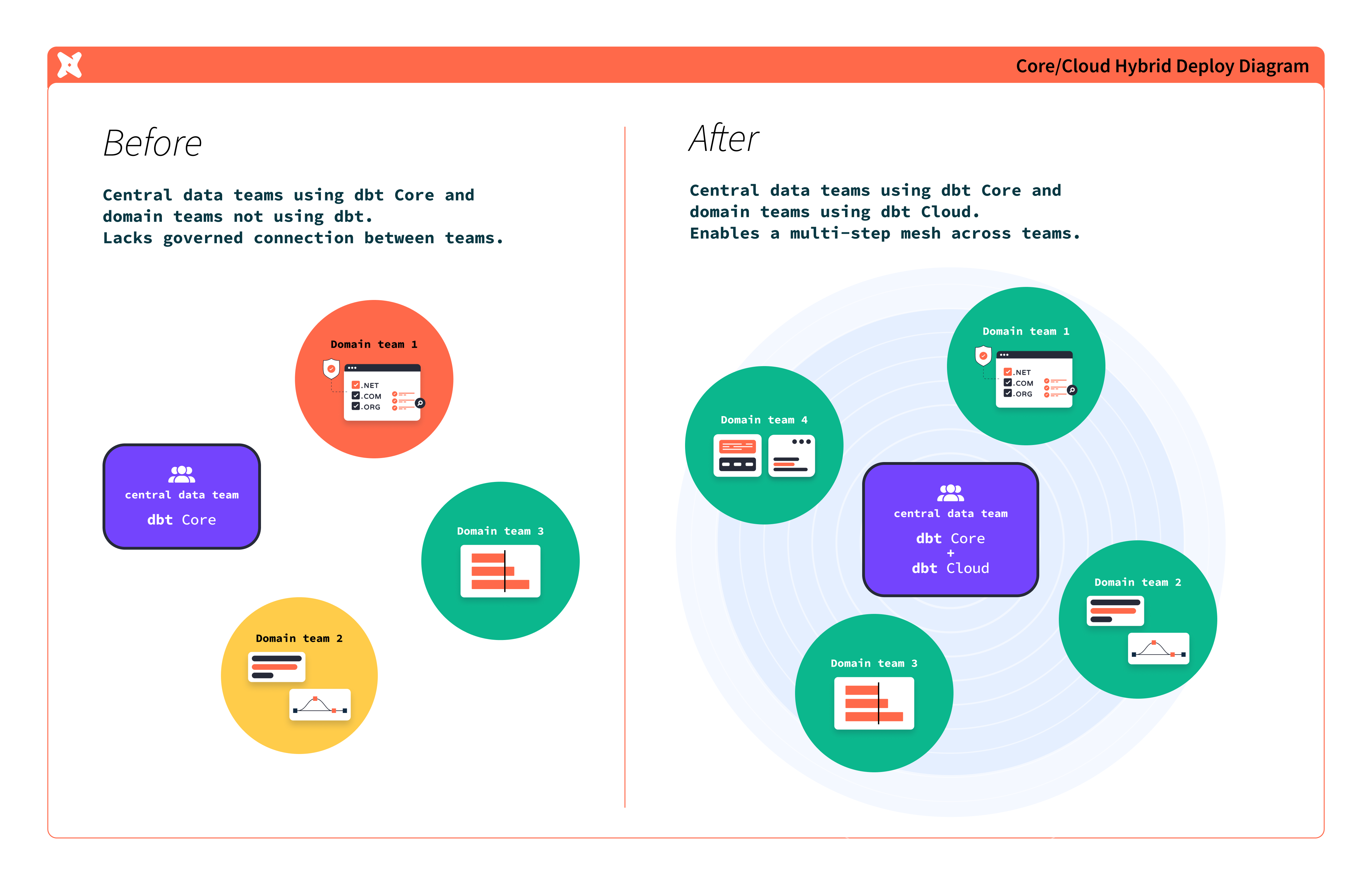

A before and after diagram highlighting how a Hybrid Mesh allows central data teams using dbt Core to work with domain data teams using dbt Cloud.

A before and after diagram highlighting how a Hybrid Mesh allows central data teams using dbt Core to work with domain data teams using dbt Cloud.Creating a Hybrid Mesh is mostly the same as creating any other dbt Mesh workflow — there are a few considerations but mostly it just works. We anticipate it will continue to see adoption as more central data teams look to onboard their downstream domain teams.

A Hybrid Mesh can be adopted as a stable long-term pattern, or as an intermediary while you perform a migration from dbt Core to dbt Cloud.

How to build a Hybrid Mesh

Enabling a Hybrid Mesh is as simple as a few additional steps to import the metadata from your Core project into dbt Cloud. Once you’ve done this, you should be able to operate your dbt Mesh like normal and all of our standard recommendations still apply.

Step 1: Prepare your Core project for access through dbt Mesh

Configure public models to serve as stable interfaces for downstream dbt Projects.

- Decide which models from your Core project will be accessible in your Mesh. For more information on how to configure public access for those models, refer to the model access page.

- Optionally set up a model contract for all public models for better governance.

- Keep dbt Core and dbt Cloud projects in separate repositories to allow for a clear separation between upstream models managed by the dbt Core team and the downstream models handled by the dbt Cloud team.

Step 2: Mirror each "producer" Core project in dbt Cloud

This allows dbt Cloud to know about the contents and metadata of your project, which in turn allows for other projects to access its models.

- Create a dbt Cloud account and a dbt project for each upstream Core project.

- Note: If you have environment variables in your project, dbt Cloud environment variables must be prefixed with

DBT_(includingDBT_ENV_CUSTOM_ENV_orDBT_ENV_SECRET). Follow the instructions in this guide to convert them for dbt Cloud.

- Note: If you have environment variables in your project, dbt Cloud environment variables must be prefixed with

- Each upstream Core project has to have a production environment in dbt Cloud. You need to configure credentials and environment variables in dbt Cloud just so that it will resolve relation names to the same places where your dbt Core workflows are deploying those models.

- Set up a merge job in a production environment to run

dbt parse. This will enable connecting downstream projects in dbt Mesh by producing the necessary artifacts for cross-project referencing.- Note: Set up a regular job to run

dbt buildinstead of using a merge job fordbt parse, and centralize your dbt orchestration by moving production runs to dbt Cloud. Check out this guide for more details on converting your production runs to dbt Cloud.

- Note: Set up a regular job to run

- Optional: Set up a regular job (for example, daily) to run

source freshnessanddocs generate. This will hydrate dbt Cloud with additional metadata and enable features in dbt Explorer that will benefit both teams, including Column-level lineage.

Step 3: Create and connect your downstream projects to your Core project using dbt Mesh

Now that dbt Cloud has the necessary information about your Core project, you can begin setting up your downstream projects, building on top of the public models from the project you brought into Cloud in Step 2. To do this:

-

Initialize each new downstream dbt Cloud project and create a

dependencies.ymlfile. -

In that

dependencies.ymlfile, add the dbt project name from thedbt_project.ymlof the upstream project(s). This sets up cross-project references between different dbt projects:# dependencies.yml file in dbt Cloud downstream project

projects:

- name: upstream_project_name -

Use cross-project references for public models in upstream project. Add version to references of versioned models:

select * from {{ ref('upstream_project_name', 'monthly_revenue') }}

And that’s all it takes! From here, the domain teams that own each dbt Project can build out their models to fit their own use cases. You can now build out your Hybrid Mesh however you want, accessing the full suite of dbt Cloud features.

- Orchestrate your Mesh to ensure timely delivery of data products and make them available to downstream consumers.

- Use dbt Explorer to trace the lineage of your data back to its source.

- Onboard more teams and connect them to your Mesh.

- Build semantic models and metrics into your projects to query them with the dbt Semantic Layer.

Conclusion

In a world where organizations have complex and ever-changing data needs, there is no one-size fits all solution. Instead, data practitioners need flexible tooling that meets them where they are. The Hybrid Mesh presents a model for this approach, where teams that are comfortable and getting value out of dbt Core can collaborate frictionlessly with domain teams on dbt Cloud.

Comments